Putting it All Together: CVMFS In Action

Previous Section: Kubernetes and Helm: The Beauty of Orchestration

We are finally at the place where we start to see all of our hard work pay off! This is the point where we continue to automate the creation of our resources as well as connect to our cluster pods and see CVMFS in action. It is assumed that ALL of the previous sections have been read.

Why all the Automation?

Manual setup of cloud infrastructure can be both time-consuming and error-prone. Automating these tasks not only ensures consistency but also accelerates the deployment process, making your infrastructure scalable and easily reproducible. By using a script to automate the deployment of resources, you minimize the risks associated with human error and improve efficiency.

The automation provided here serves two purposes:

- Consistency: Every time you run the script, it ensures that the environment is set up in exactly the same way. This eliminates discrepancies that can arise from manual setup, where missed steps or subtle differences can lead to unexpected behavior.

- Efficiency: Manual deployment requires time and detailed knowledge of each step involved, whereas an automated script performs all actions in sequence, reducing the overall setup time. This is particularly useful when deploying multiple environments (e.g., for staging, testing, and production), or when you need to make infrastructure adjustments and roll out updates.

If you’re curious to dive deeper into the underlying processes, feel free to execute each step manually and explore the commands used in the script. Understanding what each command does will give you a solid foundation in how the cloud infrastructure is built and managed.

Deploy our EC2 Instance With Terraform

In this section, we’ll automate the setup and deployment of an EC2 instance with a simple Bash script that runs the key Terraform steps in order: initialization, validation, planning, and deployment. This keeps the process efficient and consistent.

Prerequisites

Before running this script, ensure:

- You have a local PEM key pair (replace YOUR_KEY_PAIR.pem with your actual key).

- Terraform is installed on your machine.

- AWS credentials are configured.
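If you would like to confirm these prerequisites before deploying, a small preflight check can help. The following is only a sketch: the function names require_cmd and preflight and the KEY_FILE variable are our own illustration and are not part of the repo scripts.

```shell
#!/bin/bash
# Preflight sketch (hypothetical helper, not part of the repo scripts).
# KEY_FILE is a placeholder; point it at your own key pair.
KEY_FILE="${KEY_FILE:-../YOUR_KEY_PAIR.pem}"

# Succeeds only if the named command is available on the PATH.
require_cmd() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "Missing required command: $1" >&2
    return 1
  fi
}

# Runs every check and returns non-zero at the first one that fails.
preflight() {
  require_cmd terraform || return 1
  require_cmd aws || return 1
  if [ ! -f "$KEY_FILE" ]; then
    echo "Key pair not found: $KEY_FILE" >&2
    return 1
  fi
  # Asks AWS which identity we are using; fails without valid credentials.
  if ! aws sts get-caller-identity >/dev/null; then
    echo "AWS credentials are missing or invalid" >&2
    return 1
  fi
  echo "All prerequisites look good"
}
```

Calling preflight before the deployment script gives an early, readable error instead of a failure halfway through a Terraform run.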

Script Overview: create.sh

The script performs the following steps:

- Set Key Permissions: Secures the PEM key for SSH access.

- Initialize Terraform: Prepares Terraform by downloading providers and setting up configuration.

- Validate Configuration: Checks for syntax and structural errors.

- Plan Deployment: Previews resources to be created or modified.

- Apply the Plan: Deploys resources as per the saved plan.

Script Example

Save this script as create.sh in your Terraform project directory:

#!/bin/bash

# create.sh

chmod 400 ../YOUR_KEY_PAIR.pem # Secure the PEM key

terraform init # Initialize Terraform

terraform validate # Validate configuration

terraform plan -out=qc-microk8s-dev-plan # Save the plan

terraform apply qc-microk8s-dev-plan # Execute deployment

This script provides a reliable, automated way to deploy infrastructure using Terraform.
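One thing to be aware of: a plain Bash script keeps going when a command fails, so a failed validate would still be followed by plan and apply. Below is one possible fail-fast variant, offered as a sketch rather than the version in the repo. The run_step and deploy helpers and the KEY_FILE and PLAN_FILE variables are our own names; the Terraform commands are the same ones used above.

```shell
#!/bin/bash
# Fail-fast sketch of create.sh (hypothetical variant, not the repo version).
KEY_FILE="${KEY_FILE:-../YOUR_KEY_PAIR.pem}"    # placeholder key path
PLAN_FILE="${PLAN_FILE:-qc-microk8s-dev-plan}"  # same plan name as above

# Prints a label, runs the given command, and reports a failure clearly.
run_step() {
  local label="$1"
  shift
  echo "==> $label"
  if ! "$@"; then
    echo "FAILED: $label" >&2
    return 1
  fi
}

# Chains the steps with && so the first failure stops the deployment.
deploy() {
  run_step "Secure the PEM key"     chmod 400 "$KEY_FILE" &&
  run_step "Initialize Terraform"   terraform init &&
  run_step "Validate configuration" terraform validate &&
  run_step "Save the plan"          terraform plan -out="$PLAN_FILE" &&
  run_step "Apply the saved plan"   terraform apply "$PLAN_FILE"
}
```

Adding a final line that calls deploy turns this into a drop-in replacement for the script above. A simpler option is to add set -e near the top of the original script, which stops at the first failing command but without the labelled output.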

We can make the script executable and run it with

chmod +x create.sh # Only needed the first time

./create.sh

It will take a few minutes to provision, depending on the instance size you are using, but when it’s all done, the last few messages should look like the following.

aws_instance.microk8s_instance (remote-exec): Resolving deltas: 100% (6318/6318)

aws_instance.microk8s_instance (remote-exec): Resolving deltas: 100% (6318/6318), done.

aws_instance.microk8s_instance (remote-exec): NAME: cvmfs

aws_instance.microk8s_instance (remote-exec): LAST DEPLOYED: Mon Nov 11 21:17:34 2024

aws_instance.microk8s_instance (remote-exec): NAMESPACE: kube-system

aws_instance.microk8s_instance (remote-exec): STATUS: deployed

aws_instance.microk8s_instance (remote-exec): REVISION: 1

aws_instance.microk8s_instance (remote-exec): TEST SUITE: None

aws_instance.microk8s_instance (remote-exec): CVMFS installed successfully

aws_instance.microk8s_instance (remote-exec): Deploying CVMFS PVC

aws_instance.microk8s_instance (remote-exec): persistentvolumeclaim/cvmfs created

aws_instance.microk8s_instance (remote-exec): CVMFS PVC deployed successfully

aws_instance.microk8s_instance (remote-exec): Deploying CVMFS test pod

aws_instance.microk8s_instance (remote-exec): pod/cvmfs-demo created

aws_instance.microk8s_instance (remote-exec): CVMFS test pod deployed successfully

aws_instance.microk8s_instance: Creation complete after 2m53s [id=i-0545cfb13070491e5]

You should start to see things happening if everything is working correctly. If errors occur, please ensure that Terraform has been installed correctly and your AWS credentials are set up. We will also walk through how to work around some of the issues later in this post.

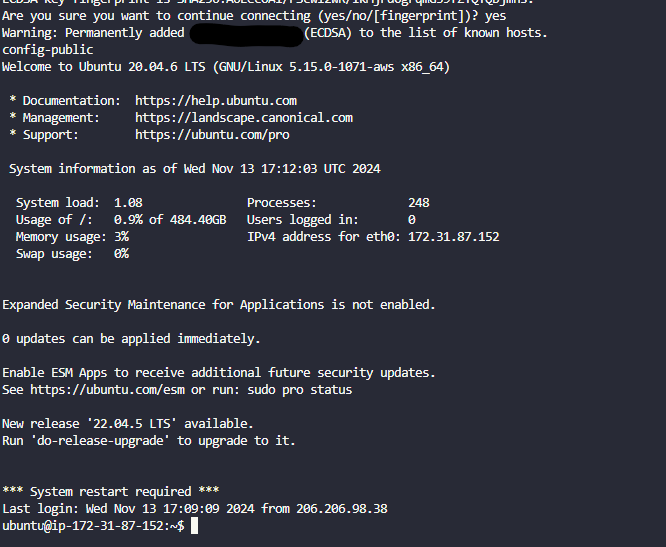

Connecting to Your MicroK8s EC2 Instance

After deploying an EC2 instance to host our MicroK8s cluster, the next step is setting up a seamless connection to manage it. For this we use a simple Bash script that connects to the EC2 instance via SSH and downloads the Kubernetes configuration file for local access. With this configuration file, we can use tools like Lens to manage and monitor the cluster in a user-friendly environment.

Script Overview: connect.sh

The connect.sh script automates three main tasks:

- Retrieve the EC2 Instance’s Public IP: Using AWS CLI commands, the script identifies the public IP address of the EC2 instance by filtering based on a specified tag name (e.g., microk8s-dev-instance). This is particularly useful in environments with multiple instances, as it allows you to pinpoint the right one.

- Download Kubernetes Config for Local Access: Once the IP address is identified, the script uses scp to download the config-public file from the MicroK8s instance to your local environment. This configuration allows tools like Lens to connect to the Kubernetes cluster and view all pods and resources through an intuitive interface.

- Connect via SSH: Finally, the script initiates an SSH connection to the instance, providing access to the EC2 server and any further on-instance configurations.

Script Details

Let’s walk through the script. Save it as connect.sh and customize it with your own key pair path, AWS region, and instance tag name.

#!/bin/bash

# Set your AWS region

AWS_REGION="us-east-1" # Change this to the region where your EC2 instance is running

# Set your EC2 key pair path

KEY_PAIR_PATH="../devbox-key-pair.pem" # Change this to the correct path to your key pair

# Set your EC2 tag name to find the instance

INSTANCE_TAG_NAME="microk8s-dev-instance"

# Get the public IP of the running instance with that tag name

INSTANCE_PUBLIC_IP=$(aws ec2 describe-instances \
  --region "$AWS_REGION" \
  --filters "Name=tag:Name,Values=$INSTANCE_TAG_NAME" "Name=instance-state-name,Values=running" \
  --query "Reservations[*].Instances[*].PublicIpAddress" \
  --output text)

# Check if an IP address was found

if [ -z "$INSTANCE_PUBLIC_IP" ]; then

echo "Instance with tag name '$INSTANCE_TAG_NAME' is not running or doesn't exist."

exit 1

fi

# Download the kube config, then connect to the instance via SSH

echo "Connecting to EC2 instance with IP: $INSTANCE_PUBLIC_IP"

scp -i "$KEY_PAIR_PATH" "ubuntu@$INSTANCE_PUBLIC_IP:/home/ubuntu/.kube/config-public" . # Download kube config

ssh -i "$KEY_PAIR_PATH" "ubuntu@$INSTANCE_PUBLIC_IP" # Connect to the instance
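The describe-instances query returns an address for every running instance that matches the tag, so if two instances share the same Name tag, INSTANCE_PUBLIC_IP ends up holding more than one value and the scp and ssh commands will fail. The sketch below shows one way to guard against that. The first_public_ip helper is our own illustration, and it assumes the AWS CLI's text output prints None for an instance that has no public IP.

```shell
#!/bin/bash
# first_public_ip (hypothetical helper): reduce the text output of
# describe-instances to a single usable address.
# Prints the first real address, or returns non-zero if there is none.
first_public_ip() {
  # Unquoted on purpose: split the output on spaces, tabs, and newlines.
  for candidate in $1; do
    # Assumption: "None" is what the CLI prints when there is no public IP.
    if [ "$candidate" != "None" ]; then
      echo "$candidate"
      return 0
    fi
  done
  return 1
}

# Possible use inside connect.sh, right after the describe-instances call:
#   INSTANCE_PUBLIC_IP=$(first_public_ip "$INSTANCE_PUBLIC_IP")
```

With this in place, the existing empty-string check in connect.sh also catches the case where the instance is running but has no public address.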

Ensuring Everything is Running

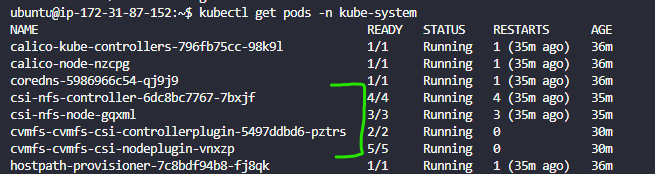

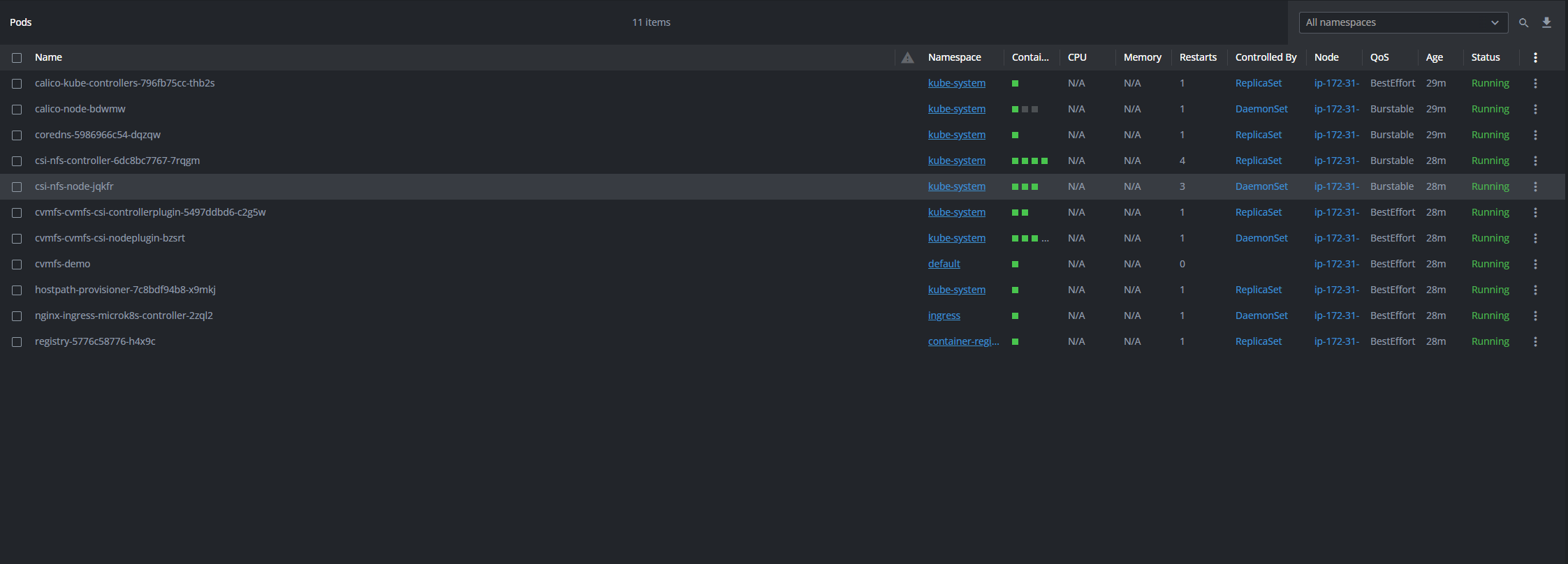

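Once everything has been created with create.sh and we are connected to the instance through connect.sh, we can check that all the required pods are running.

First, check the system pods

kubectl get pods -n kube-system

and then the pods in the default namespace

kubectl get pods

The CVMFS driver pods should be listed in kube-system and the cvmfs-demo test pod in the default namespace, all with a status of Running.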

By downloading the Kubernetes config-public file to your local machine, you can use Lens or similar Kubernetes management tools to gain a graphical view of your cluster. This simplifies monitoring and managing your pods, services, and resources, offering a clearer and more accessible experience than using command-line tools alone.

The config-public file is also now in our current working directory in the Docker container, so we can copy and paste its contents into Lens to connect to the cluster. We will then be able to see the NFS and CVMFS drivers up and running.
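If you prefer the command line to Lens, the same file can be passed to kubectl through its --kubeconfig flag. This is a minimal sketch that assumes kubectl is installed on your local machine and that the cluster's API server is reachable from it. The kube_local wrapper and the KUBECONFIG_FILE variable are our own names.

```shell
#!/bin/bash
# KUBECONFIG_FILE is a placeholder for wherever connect.sh saved the file.
KUBECONFIG_FILE="${KUBECONFIG_FILE:-./config-public}"

# kube_local (hypothetical wrapper): run any kubectl command against the
# remote MicroK8s cluster using the downloaded config.
kube_local() {
  kubectl --kubeconfig "$KUBECONFIG_FILE" "$@"
}

# Example calls from your local machine:
#   kube_local get pods -n kube-system
#   kube_local get pods
```

Keeping the config in its own file, rather than merging it into your default kubeconfig, makes it easy to discard once the instance is torn down.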

Issues

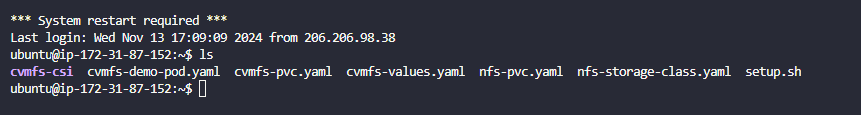

If you DO NOT see the CVMFS drivers OR the Test Pod running, then there may have been an issue with the remote provisioning that brings up the drivers. This is a common occurrence, and you can recover from it by doing the following on the instance

- Check to see if the repo is in the current directory by doing

ls

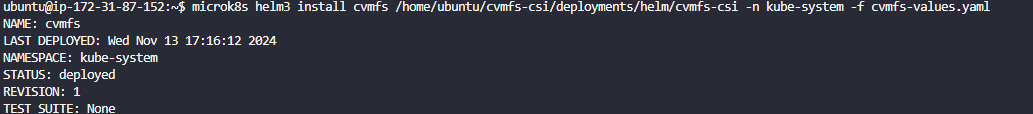

- Enter the following command to manually install the CVMFS Drivers

microk8s helm3 install cvmfs /home/ubuntu/cvmfs-csi/deployments/helm/cvmfs-csi -n kube-system -f cvmfs-values.yaml

- You should see the following

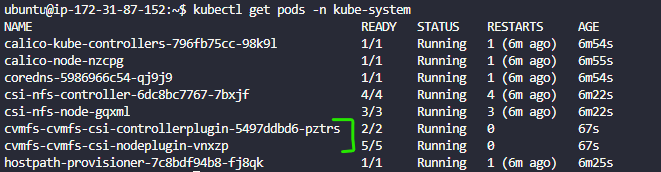

- You can check to see if the pods are running by doing

kubectl get pods -n kube-system

And you should see the following

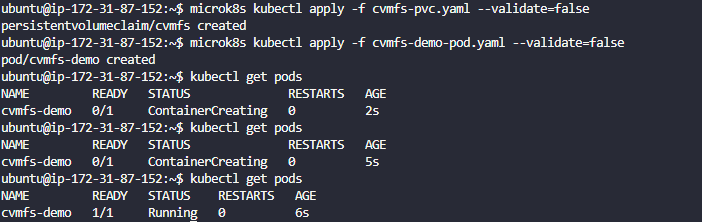

- Now deploy the PVC and the Test Pod by doing the following in the command prompt

microk8s kubectl apply -f cvmfs-pvc.yaml --validate=false

microk8s kubectl apply -f cvmfs-demo-pod.yaml --validate=false

and once the container is created, you should see the cvmfs-demo pod running.
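Rather than re-running kubectl get pods until the status changes, we can let kubectl do the waiting. The sketch below wraps kubectl wait; the wait_for_demo_pod name and the 180s default timeout are our own choices, while the pod name cvmfs-demo comes from the provisioning output earlier in this post.

```shell
#!/bin/bash
# wait_for_demo_pod (hypothetical helper): block until the test pod reports
# Ready, or give up when the timeout expires.
# The first argument is an optional timeout such as 60s or 5m.
wait_for_demo_pod() {
  local wait_time="${1:-180s}"  # arbitrary default, adjust to taste
  microk8s kubectl wait --for=condition=Ready pod/cvmfs-demo --timeout="$wait_time"
}

# Example call on the instance:
#   wait_for_demo_pod 5m
```

The command exits with a non-zero status if the pod is not Ready in time, which makes it easy to use in a script before moving on to the next step.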

The Main Event

This is the moment we have all been waiting for. After all of the tireless config creation, changes, and head banging, we can finally see whether everything is configured properly. If you followed every step in the guide and used all of the scripts we have provided, there is a 99% chance that this all just works. (We are very sorry for the 1%; please do reach out if you require help.)

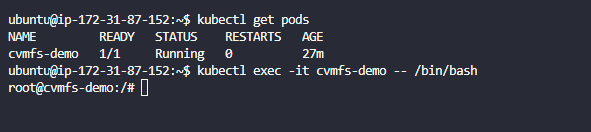

- Let’s open a shell in the demo pod by running

kubectl exec -it cvmfs-demo -- /bin/bash

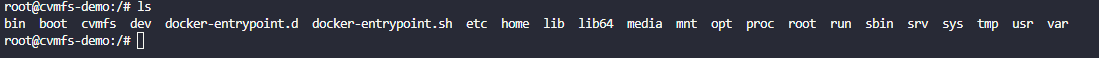

- Do an

lsto make sure you have the/cvmfsfolder in your root

cdinto the/cvmfsfolder- Do another

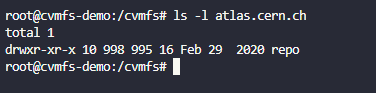

lsto see if anything is there (There should not be) - BUT now we can do

ls -l atlas.cern.ch- What is

atlas.cern.ch, you can read more about the Atlas project here https://atlas.cern/Discover/Detector

- What is

- AND BOOM, we see something

- We can now go into the folder and have access to all the resources found in this repository

root@cvmfs-demo:/cvmfs# ls

atlas.cern.ch cvmfs-config.cern.ch

root@cvmfs-demo:/cvmfs# cd atlas.cern.ch/

root@cvmfs-demo:/cvmfs/atlas.cern.ch# ls

repo

root@cvmfs-demo:/cvmfs/atlas.cern.ch# cd repo/

root@cvmfs-demo:/cvmfs/atlas.cern.ch/repo# ls

ATLASLocalRootBase benchmarks conditions containers dev sw test tools tutorials

root@cvmfs-demo:/cvmfs/atlas.cern.ch/repo#

What Just Happened?

How did our pod go from empty to housing a repository full of tools for the ATLAS project? That’s the power of CVMFS at work. The CVMFS drivers we installed earlier are responsible for managing these dynamic volumes. When we access the /cvmfs mount, it signals the drivers to retrieve the requested resources. The drivers, with the help of FUSE (Filesystem in Userspace), seamlessly pull in the data needed for the repository, giving us instant access to specialized tools and data.
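The practical takeaway is that a repository shows up only once we ask for it by name, which is why the first ls in /cvmfs came back empty while ls -l atlas.cern.ch worked. A small sketch of that idea is below. The probe_repo helper and the CVMFS_ROOT variable are our own names, and the root is a variable only so the function can be tried against any directory.

```shell
#!/bin/bash
# CVMFS_ROOT is /cvmfs inside the demo pod; override it to experiment elsewhere.
CVMFS_ROOT="${CVMFS_ROOT:-/cvmfs}"

# probe_repo (hypothetical helper): access a repository path by name, which is
# the access that prompts the drivers to mount it, then report the result.
probe_repo() {
  local repo="$1"
  if ls "$CVMFS_ROOT/$repo" >/dev/null 2>&1; then
    echo "$repo is mounted"
  else
    echo "$repo could not be mounted" >&2
    return 1
  fi
}

# Example call inside the demo pod:
#   probe_repo atlas.cern.ch
```

A check like this can be handy at the start of a job that depends on a repository, since it fails early if the repository is unavailable.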

So What’s Next?

Now that we’ve accessed the ATLAS project’s repository through CVMFS, you might be wondering how this setup could benefit our own projects. Imagine having an instantly accessible, version-controlled library of tools, datasets, and configurations that’s always up-to-date and readily available to anyone who needs it. This is the power of CVMFS. It’s not only useful for accessing existing repositories like ATLAS but also for hosting and sharing your own resources.

How Can We Use This for Our Own Projects?

CVMFS allows us to create and manage custom repositories where we can package our own tools, scripts, datasets, and even entire software environments. By hosting these resources on a CVMFS repository, we can ensure consistency across development and production environments and make collaboration smoother for teams working with large or complex data.

In the next part of our series, we’ll explore a live use case: The Galaxy Project. This project leverages CVMFS to manage and distribute bioinformatics tools, datasets, and workflows. We’ll dive into how Galaxy uses CVMFS to ensure that researchers have access to the latest tools and resources, and how you can apply similar principles to streamline and scale your own projects. Stay tuned!

Optional: Teardown of Instance

Here is a small script to tear down your entire EC2 instance once you are done practicing. You can use this in any Terraform setup.

#!/bin/bash

set -e # Stop if a command fails, so state files are never deleted after a failed destroy

echo "Destroying Terraform-managed resources..."

terraform init

terraform destroy -auto-approve

# Optional: Remove all terraform state files

rm -f terraform.tfstate

rm -f terraform.tfstate.backup

rm -f .terraform.tfstate.lock.info

rm -f .terraform.lock.hcl

rm -rf .terraform

echo "Terraform resources destroyed."

Repo for FULL Setup https://github.com/lablytics/cvmfs-csi/tree/master/devops

Next Section: Use Case: The Galaxy Project