Use Case: The Galaxy Project

Galaxy Project

In the era of big data, researchers across scientific disciplines require robust, scalable, and user-friendly platforms to analyze complex datasets. The Galaxy Project is an open-source, web-based computational workbench designed to empower researchers by providing accessible, reproducible, and transparent data analysis. Originally developed for bioinformatics and genomics, Galaxy has since evolved into a general-purpose platform for a wide range of scientific workflows, spanning domains such as climate science, cheminformatics, and machine learning.

At its core, Galaxy simplifies complex computational tasks by offering an interactive graphical user interface (GUI), eliminating the need for researchers to have deep programming knowledge. It also supports workflow automation, allowing users to build and share reproducible pipelines effortlessly. Galaxy’s extensibility is powered by a rich ecosystem of tools, a thriving community, and integrations with cloud and high-performance computing (HPC) environments.

With deployments ranging from local installations to large-scale Kubernetes-based infrastructures, Galaxy ensures that researchers can harness computational power efficiently, regardless of their expertise level. Whether running on personal servers, institutional clusters, or public clouds, Galaxy facilitates seamless collaboration, data sharing, and robust computational analysis.

In this post, we will apply what we have covered so far: we will set up the CVMFS drivers, bring up a cluster that runs the Galaxy Project, and process some demo data.

Chart Resources

If you have not read the previous blog posts, we strongly advise doing so first; they introduce the DevOps technologies we are using to deploy this project.

Terraform Files

We will continue to use main.tf as the backbone for deploying an EC2 instance and provisioning it with Terraform. In this file, we also add an Elastic IP (EIP) that stays fixed, so that if we take the instance down and bring it back up, the same IP still points to the Galaxy Project web page. The other major difference is that the provisioning scripts now run after the EIP is assigned, because we need that IP address to connect to the cluster from our local machine via Lens.

provider "aws" {
  region = "us-east-1"
}

data "http" "myip" {
  url = "https://ipv4.icanhazip.com"
}

data "aws_ami" "ubuntu" {
  most_recent = true

  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-*"]
  }

  owners = ["099720109477"]
}

resource "aws_security_group" "microk8s_sg" {
  name        = "microk8s-sg"
  description = "Security group for MicroK8s EC2 instance"
  vpc_id      = var.vpc_id

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = [var.your_ip, "${chomp(data.http.myip.response_body)}/32"]
    description = "Allow SSH access from your public IP"
  }

  ingress {
    from_port   = 16443
    to_port     = 16443
    protocol    = "tcp"
    cidr_blocks = [var.your_ip, "${chomp(data.http.myip.response_body)}/32"]
    description = "Allow K8s access from your public IP"
  }

  ingress {
    from_port   = 8080
    to_port     = 8080
    protocol    = "tcp"
    cidr_blocks = [var.your_ip, "${chomp(data.http.myip.response_body)}/32"]
    description = "Allow port-forward access to Galaxy service"
  }

  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = [var.your_ip, "${chomp(data.http.myip.response_body)}/32"]
    description = "Allow HTTP access on port 80"
  }

  # Allow access to the NodePort (e.g., 32000)
  ingress {
    from_port   = var.web_node_port
    to_port     = var.web_node_port
    protocol    = "tcp"
    cidr_blocks = [var.your_ip, "${chomp(data.http.myip.response_body)}/32"]
    description = "Allow public access to NodePort service"
  }
}

# Step 1: Allocate the Elastic IP
resource "aws_eip" "microk8s_eip" {
  domain = "vpc"
}

resource "aws_instance" "microk8s_instance" {
  ami                    = data.aws_ami.ubuntu.id
  instance_type          = var.instance_type
  subnet_id              = var.subnet_id
  vpc_security_group_ids = [aws_security_group.microk8s_sg.id]
  key_name               = var.key_name

  root_block_device {
    volume_size = 500
    volume_type = "gp2"
  }

  tags = {
    Name = "microk8s-dev-instance"
  }
}

resource "aws_eip_association" "microk8s_eip_assoc" {
  instance_id   = aws_instance.microk8s_instance.id
  allocation_id = aws_eip.microk8s_eip.id
}

resource "null_resource" "post_eip_provisioning" {
  depends_on = [aws_eip_association.microk8s_eip_assoc]

  provisioner "file" {
    source      = "${path.module}/setup.sh"
    destination = "/home/ubuntu/setup.sh"

    connection {
      type        = "ssh"
      user        = "ubuntu"
      private_key = file(var.private_key_path)
      host        = aws_eip.microk8s_eip.public_ip
    }
  }

  provisioner "file" {
    source      = "${path.module}/cvmfs-values.yaml"
    destination = "/home/ubuntu/cvmfs-values.yaml"

    connection {
      type        = "ssh"
      user        = "ubuntu"
      private_key = file(var.private_key_path)
      host        = aws_eip.microk8s_eip.public_ip
    }
  }

  provisioner "file" {
    source      = "${path.module}/galaxy-pvc.yaml"
    destination = "/home/ubuntu/galaxy-pvc.yaml"

    connection {
      type        = "ssh"
      user        = "ubuntu"
      private_key = file(var.private_key_path)
      host        = aws_eip.microk8s_eip.public_ip
    }
  }

  provisioner "file" {
    source      = "${path.module}/galaxy-values.yaml"
    destination = "/home/ubuntu/galaxy-values.yaml"

    connection {
      type        = "ssh"
      user        = "ubuntu"
      private_key = file(var.private_key_path)
      host        = aws_eip.microk8s_eip.public_ip
    }
  }

  provisioner "file" {
    source      = "${path.module}/galaxy-services.sh"
    destination = "/home/ubuntu/galaxy-services.sh"

    connection {
      type        = "ssh"
      user        = "ubuntu"
      private_key = file(var.private_key_path)
      host        = aws_eip.microk8s_eip.public_ip
    }
  }

  provisioner "remote-exec" {
    inline = [
      "sudo bash /home/ubuntu/setup.sh",
      "sudo chmod +x /home/ubuntu/galaxy-services.sh",
    ]

    connection {
      type        = "ssh"
      user        = "ubuntu"
      private_key = file(var.private_key_path)
      host        = aws_eip.microk8s_eip.public_ip
    }
  }
}
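Each ingress rule in main.tf builds its second CIDR entry from the `http` data source: `chomp` strips the trailing newline from the icanhazip response, and the `/32` suffix restricts the rule to that single address. As a rough illustration of that transformation, here is a minimal shell sketch. The function name `to_cidr32` is hypothetical and is not part of the project's scripts; it only checks that the input looks like a dotted-quad address.

```shell
# Hypothetical helper mirroring "${chomp(data.http.myip.response_body)}/32".
to_cidr32() {
  local ip
  # Command substitution drops trailing newlines; tr removes carriage returns.
  ip=$(printf '%s' "$1" | tr -d '\r')
  # Accept only input that looks like a dotted-quad IPv4 address.
  if ! printf '%s\n' "$ip" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'; then
    echo "not an IPv4 address: $ip" >&2
    return 1
  fi
  printf '%s/32\n' "$ip"
}

# Example (requires network access, so it is left commented out):
#   to_cidr32 "$(curl -s https://ipv4.icanhazip.com)"
```

A value produced this way has the same shape as the CIDR entries Terraform places in `cidr_blocks`, which can be handy when checking what the security group will allow.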

Routing (Optional)

To simplify access to our Galaxy Project instance, we will configure a domain name, galaxy.lablytics.com, for easy navigation.

To achieve this, you will need to create a hosted zone within your DNS provider. Alternatively, you can define the hosted zone directly in your infrastructure code for a more automated setup.

routes.tf

# Route 53 A Record for NodePort service using the Elastic IP
data "aws_route53_zone" "zone" {
  name = "lablytics.com"
}

resource "aws_route53_record" "nodeport_service" {
  zone_id = data.aws_route53_zone.zone.zone_id
  name    = var.domain_name
  type    = "A"
  ttl     = 300
  records = [aws_eip.microk8s_eip.public_ip]
}

resource "aws_route53_record" "www_nodeport_service" {
  zone_id = data.aws_route53_zone.zone.zone_id
  name    = "www.${var.domain_name}"
  type    = "A"
  ttl     = 300
  records = [aws_eip.microk8s_eip.public_ip]
}

Setting Up the Provisioning Process

To provision and configure the CVMFS drivers, we will use the setup.sh script, which follows the standard approach outlined in the official CVMFS driver tutorials. This script ensures that the necessary drivers are installed and ready for use. You can find our version of the setup script in our public repository here: [TODO: Insert link].

Provisioning Galaxy Services

We will create a galaxy-services.sh script that will be executed directly within the EC2 instance. This approach is necessary because performing Helm updates over an SSH connection initiated by Terraform can sometimes result in connectivity issues.

The script itself is straightforward: it installs the CVMFS CSI driver with Helm and then deploys the Galaxy Project using predefined configuration values. Running it within the EC2 instance gives us a more reliable provisioning process that avoids those SSH-related limitations.

function install_cvmfs(){
  echo "Installing CVMFS on K8s..."
  git clone https://github.com/lablytics/cvmfs-csi /home/ubuntu/cvmfs-csi
  microk8s helm3 install cvmfs /home/ubuntu/cvmfs-csi/deployments/helm/cvmfs-csi -n kube-system -f /home/ubuntu/cvmfs-values.yaml
  echo "CVMFS installed successfully"
  sleep 120
}

function install_galaxy(){
  echo "Installing Galaxy on K8s..."
  microk8s kubectl create namespace galaxy
  microk8s kubectl apply -f /home/ubuntu/galaxy-pvc.yaml
  echo "Sleeping for 15 seconds..."
  sleep 15
  echo "Waking up..."

  # Install Galaxy
  git clone https://github.com/galaxyproject/galaxy-helm.git /home/ubuntu/galaxy-helm
  cd /home/ubuntu/galaxy-helm/galaxy
  git checkout 2557baac75ee56c8c9801946c9ee8d633db7d56e # Checkout to the version that works with the current Galaxy version
  sudo microk8s helm3 dependency update
  microk8s helm3 install -n galaxy galaxy-project /home/ubuntu/galaxy-helm/galaxy -f /home/ubuntu/galaxy-values.yaml --timeout 1m30s
  echo "Galaxy installed successfully"
}

function main(){
  install_cvmfs
  echo "Sleeping for 30 seconds..."
  sleep 30
  echo "Waking up..."
  install_galaxy
}

main
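The script pauses for fixed intervals (`sleep 120`, `sleep 30`, `sleep 15`) to give the cluster time to settle. One possible alternative, shown here only as a sketch and not as the project's approach, is to poll until a condition holds. The helper name `wait_until` is hypothetical.

```shell
# Hypothetical helper: retry a command until it succeeds or a timeout elapses.
# Usage: wait_until <timeout_seconds> <interval_seconds> <command> [args...]
wait_until() {
  local timeout="$1" interval="$2" waited=0
  shift 2
  until "$@" >/dev/null 2>&1; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Timed out after ${timeout}s waiting for: $*" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}

# Example (assumes the storage class is named "cvmfs", as in cvmfs-values.yaml):
#   wait_until 300 5 microk8s kubectl get storageclass cvmfs
```

Polling returns as soon as the resource appears and fails loudly if it never does, whereas a fixed sleep always waits the full interval and continues regardless of the outcome.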

Value Files

We will need to update our cvmfs-values.yaml file and create a galaxy-values.yaml configuration file for the Galaxy deployment. Notice that cvmfs-values.yaml now carries Galaxy-specific repository configuration (the server URLs and public key for data.galaxyproject.org), which the deployment needs access to.

# cvmfs-values.yaml
extraConfigMaps:
  cvmfs-csi-default-local:
    default.local: |
      CVMFS_HTTP_PROXY="DIRECT"
      CVMFS_QUOTA_LIMIT="4000"
      CVMFS_USE_GEOAPI="yes"
      CVMFS_AUTOFS_TIMEOUT=3600
      CVMFS_DEBUGLOG=/tmp/cvmfs.log
      {{- if .Values.cache.alien.enabled }}
      CVMFS_ALIEN_CACHE={{ .Values.cache.alien.location }}
      # When alien cache is used, CVMFS does not control the size of the cache.
      CVMFS_QUOTA_LIMIT=-1
      # Whether repositories should share a cache directory or each have their own.
      CVMFS_SHARED_CACHE=no
      {{- end -}}
  cvmfs-csi-config-d:
    data.galaxyproject.org.conf: |
      CVMFS_SERVER_URL="http://cvmfs1-iu0.galaxyproject.org/cvmfs/@fqrn@;http://cvmfs1-tacc0.galaxyproject.org/cvmfs/@fqrn@;http://cvmfs1-psu0.galaxyproject.org/cvmfs/@fqrn@;http://cvmfs1-mel0.gvl.org.au/cvmfs/@fqrn@;http://cvmfs1-ufr0.galaxyproject.eu/cvmfs/@fqrn@"
      CVMFS_PUBLIC_KEY="/etc/cvmfs/config.d/data.galaxyproject.org.pub"
    data.galaxyproject.org.pub: |
      -----BEGIN PUBLIC KEY-----
      MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEA5LHQuKWzcX5iBbCGsXGt
      6CRi9+a9cKZG4UlX/lJukEJ+3dSxVDWJs88PSdLk+E25494oU56hB8YeVq+W8AQE
      3LWx2K2ruRjEAI2o8sRgs/IbafjZ7cBuERzqj3Tn5qUIBFoKUMWMSIiWTQe2Sfnj
      GzfDoswr5TTk7aH/FIXUjLnLGGCOzPtUC244IhHARzu86bWYxQJUw0/kZl5wVGcH
      maSgr39h1xPst0Vx1keJ95AH0wqxPbCcyBGtF1L6HQlLidmoIDqcCQpLsGJJEoOs
      NVNhhcb66OJHah5ppI1N3cZehdaKyr1XcF9eedwLFTvuiwTn6qMmttT/tHX7rcxT
      owIDAQAB
      -----END PUBLIC KEY-----
automountHostPath: /cvmfs
kubeletDirectory: /var/snap/microk8s/common/var/lib/kubelet
automountStorageClass:
  create: true
  name: cvmfs

# galaxy-values.yaml
cvmfs:
  deploy: false
persistence:
  existingClaim: "galaxy-pvc"
ingress:
  enabled: true
  ingressClassName: nginx
  annotations:
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
  path: /
  hosts:
    - host: ~
      paths:
        - path: "/"
        - path: "/training-material"
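Because galaxy-services.sh reads these files from /home/ubuntu, a quick preflight check before running it can catch a failed file copy early. The following is a minimal sketch under that assumption; the helper name `require_files` is hypothetical and does not appear in the project's scripts.

```shell
# Hypothetical preflight: confirm every listed file exists and is non-empty.
require_files() {
  local missing=0 f
  for f in "$@"; do
    if [ ! -s "$f" ]; then
      echo "Missing or empty: $f" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example, using the destinations from the Terraform file provisioners:
#   require_files /home/ubuntu/cvmfs-values.yaml \
#                 /home/ubuntu/galaxy-pvc.yaml \
#                 /home/ubuntu/galaxy-values.yaml || exit 1
```

The check reports every missing file rather than stopping at the first, so a single run shows everything that still needs to be copied.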

Putting it all together

Now we can create the EC2 instance by running the ./create.sh script that we wrote previously. It tells Terraform to initialize, validate the configuration, create a plan, and finally apply that plan to create the AWS resources required to deploy the Galaxy Project.

#!/bin/bash
terraform init
terraform validate
terraform plan -out=qc-microk8s-dev-plan
terraform apply qc-microk8s-dev-plan
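As written, the script keeps going even if an earlier command fails, so `terraform apply` could run after a failed validate or plan. One way to stop at the first failure is sketched below. The wrapper name `run_steps` is hypothetical; placing `set -e` at the top of the script is a common alternative with a similar effect.

```shell
# Hypothetical wrapper: run each step in order and stop at the first failure,
# so later steps never run after an earlier one has failed.
run_steps() {
  local step
  for step in "$@"; do
    echo "==> $step"
    # Each step is one command line; sh -c lets it carry its own arguments.
    if ! sh -c "$step"; then
      echo "Step failed: $step" >&2
      return 1
    fi
  done
}

# Example, using the same commands as the script above:
#   run_steps "terraform init" \
#             "terraform validate" \
#             "terraform plan -out=qc-microk8s-dev-plan" \
#             "terraform apply qc-microk8s-dev-plan"
```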

Once our instance has been successfully created and provisioned, we can establish an SSH connection using the connect.sh script.

In this iteration, we have made a small but impactful change from the previous tutorial: the Kubernetes configuration (kubeconfig) is now copied to a location that Lens can automatically sync with. This is achieved by mounting the local directory where Lens expects the configuration into the Docker DevOps container.

As a result, when we transfer the kubeconfig from the EC2 instance to the Docker container using scp, the mount ensures that our local machine also has direct access to the file. This streamlines the workflow, eliminating the need for additional manual steps.

#!/bin/bash

# Set your AWS region
AWS_REGION="us-east-1" # Change this to the region where your EC2 instance is running

# Set your EC2 key pair path
KEY_PAIR_PATH="../devbox-key-pair.pem" # Change this to the correct path to your key pair

# Set your EC2 tag name to find the instance
INSTANCE_TAG_NAME="microk8s-dev-instance"

# Get the public IP of the instance with the tag name 'microk8s-dev-instance'
INSTANCE_PUBLIC_IP=$(aws ec2 describe-instances \
  --region $AWS_REGION \
  --filters "Name=tag:Name,Values=$INSTANCE_TAG_NAME" "Name=instance-state-name,Values=running" \
  --query "Reservations[*].Instances[*].PublicIpAddress" \
  --output text)

# Check if an IP address was found
if [ -z "$INSTANCE_PUBLIC_IP" ]; then
  echo "Instance with tag name '$INSTANCE_TAG_NAME' is not running or doesn't exist."
  exit 1
fi

# Connect to the instance via SSH
echo "Connecting to EC2 instance with IP: $INSTANCE_PUBLIC_IP"
scp -i "$KEY_PAIR_PATH" ubuntu@$INSTANCE_PUBLIC_IP:/home/ubuntu/.kube/config-public /configs/microk8s-config
ssh -i "$KEY_PAIR_PATH" ubuntu@$INSTANCE_PUBLIC_IP
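Lens can only reach the cluster if the copied kubeconfig points at the instance's public address on the MicroK8s API port (16443, which the security group opens). A small sketch for inspecting the file after the `scp` step follows. It assumes that config-public, which is produced by setup.sh and is not shown in this post, was already written with the public address; the helper name `kubeconfig_server` is hypothetical.

```shell
# Hypothetical check: print the API server URL(s) recorded in a kubeconfig file.
kubeconfig_server() {
  # Matches lines such as "    server: https://203.0.113.7:16443".
  sed -n 's/^[[:space:]]*server:[[:space:]]*//p' "$1"
}

# Example, using the destination path from the scp command above:
#   kubeconfig_server /configs/microk8s-config
```

If the printed URL shows a loopback or private address instead of the Elastic IP, Lens will not be able to connect from the local machine.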

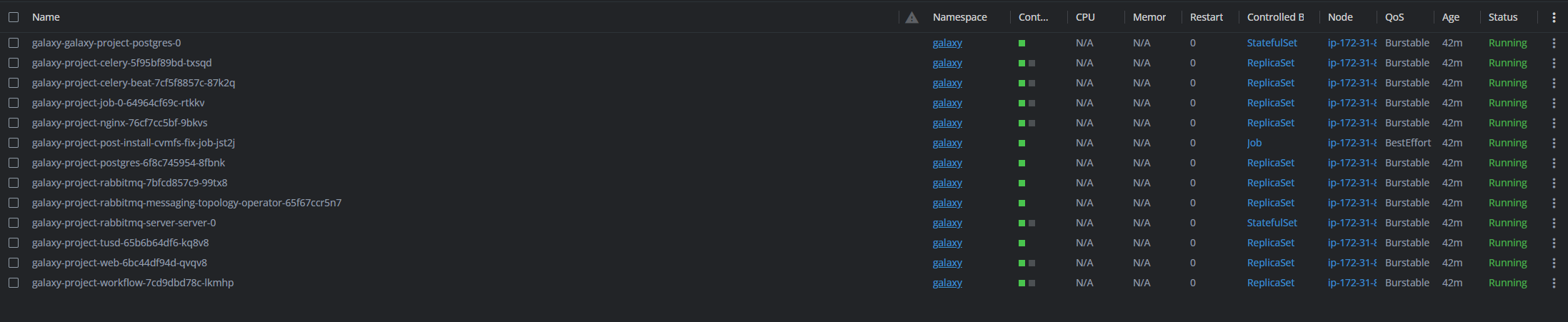

Once you are connected to the instance, run the ./galaxy-services.sh script, which installs everything you need. This takes around five minutes; once everything is running, you should see something like this:

Lens

CLI

ubuntu@ip-172-31-85-199:~/galaxy-helm/galaxy$ kubectl get pods -n galaxy
NAME                                                             READY   STATUS    RESTARTS   AGE
galaxy-galaxy-project-postgres-0                                 1/1     Running   0          41m
galaxy-project-celery-5f95bf89bd-txsqd                           1/1     Running   0          41m
galaxy-project-celery-beat-7cf5f8857c-87k2q                      1/1     Running   0          41m
galaxy-project-job-0-64964cf69c-rtkkv                            1/1     Running   0          41m
galaxy-project-nginx-76cf7cc5bf-9bkvs                            1/1     Running   0          41m
galaxy-project-post-install-cvmfs-fix-job-jst2j                  1/1     Running   0          41m
galaxy-project-postgres-6f8c745954-8fbnk                         1/1     Running   0          41m
galaxy-project-rabbitmq-7bfcd857c9-99tx8                         1/1     Running   0          41m
galaxy-project-rabbitmq-messaging-topology-operator-65f67ccr5n7  1/1     Running   0          41m
galaxy-project-rabbitmq-server-server-0                          1/1     Running   0          41m
galaxy-project-tusd-65b6b64df6-kq8v8                             1/1     Running   0          41m
galaxy-project-web-6bc44df94d-qvqv8                              1/1     Running   0          41m
galaxy-project-workflow-7cd9dbd78c-lkmhp                         1/1     Running   0          41m
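Scanning a listing like this by eye gets tedious while pods are still starting. As a rough sketch, the output can be filtered to show only the pods that still need attention. The function name `unready_pods` is hypothetical, and it assumes the default column layout shown above (NAME, READY, STATUS, ...).

```shell
# Hypothetical filter: read "kubectl get pods" output on stdin and print the
# names of pods that are neither fully ready and Running nor Completed.
unready_pods() {
  awk 'NR > 1 {
    split($2, ready, "/")   # READY column, e.g. "1/1"
    ok_state = ($3 == "Running" && ready[1] == ready[2]) || $3 == "Completed"
    if (!ok_state) print $1
  }'
}

# Example:
#   microk8s kubectl get pods -n galaxy | unready_pods
```

An empty result means every pod in the namespace is either ready or has completed.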

Going through the demo

To confirm that everything is working correctly, follow the tutorial provided by Galaxy.

First, to access the web UI, we need to do one of the following:

- Forward SSH Tunnel to the WebUI Pod

- Go to the IP address of the EC2 Instance

- Go to the assigned domain name of the EC2 Instance

Forward SSH Tunnel

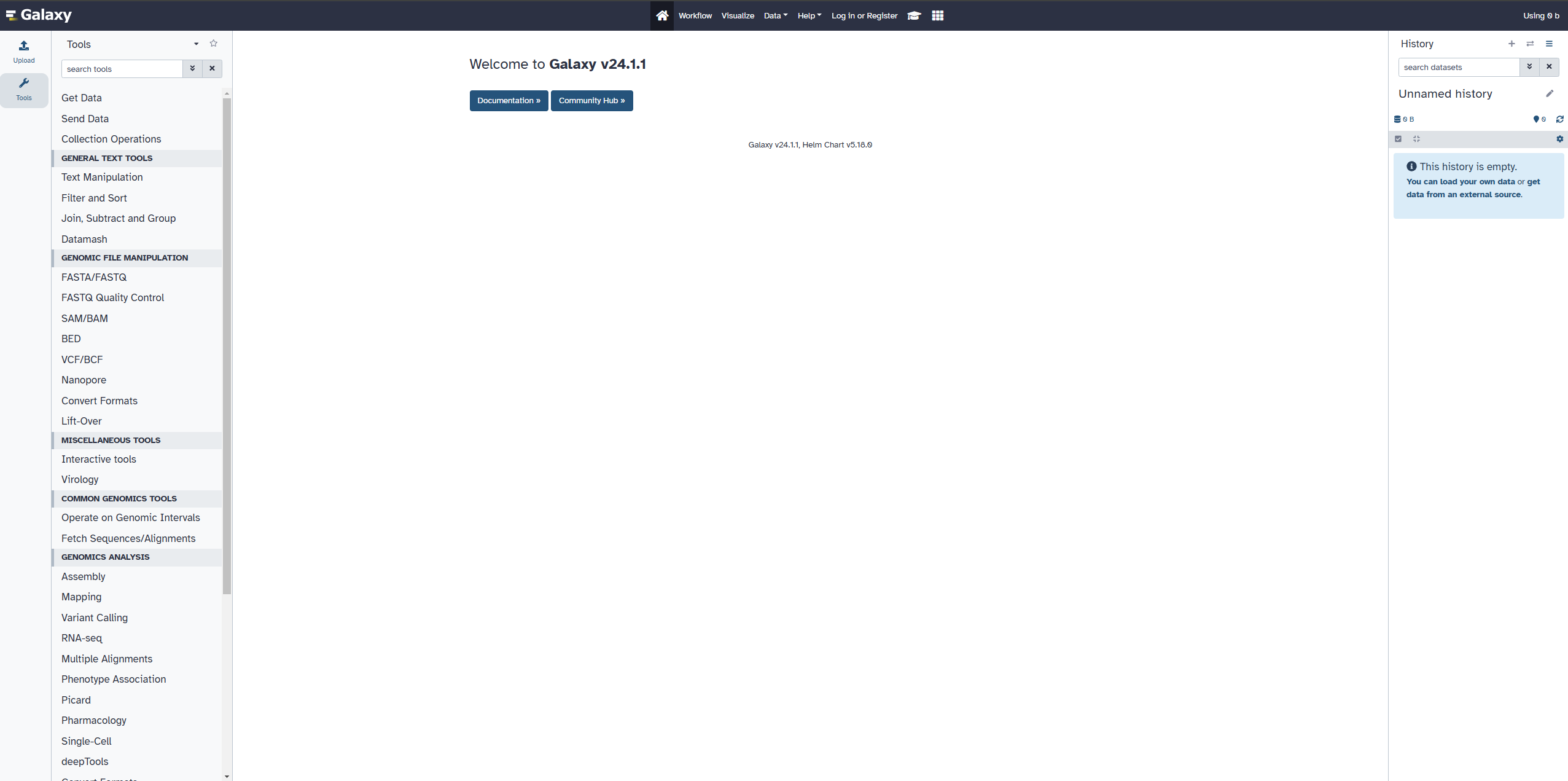

Lens

In Lens, go to the galaxy-project-web-... pod, and open it. If you scroll down to Containers, then Port, you should see that the container is running on port 8080. There is a blue Forward… button that allows you to port forward to the pod instance and access it. Once you set that up, you should be able to view http://localhost:[ASSIGNED_PORT]/ and see the Galaxy UI.

CLI

You can run the following command to set up the port forward:

kubectl port-forward pod/<POD_NAME> <LOCAL_PORT>:8080 -n galaxy
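The pod name changes whenever the web pod is recreated, so it can be convenient to look it up rather than copy it by hand. The sketch below assumes the web pod's name starts with `galaxy-project-web-`, as in the listing earlier in this post; the helper name `first_pod_with_prefix` is hypothetical.

```shell
# Hypothetical helper: from "kubectl get pods -o name" output on stdin
# (lines such as "pod/<name>"), print the first pod name with a given prefix.
first_pod_with_prefix() {
  awk -v prefix="pod/$1" 'index($0, prefix) == 1 { sub(/^pod\//, ""); print; exit }'
}

# Example:
#   POD_NAME=$(microk8s kubectl get pods -n galaxy -o name | first_pod_with_prefix galaxy-project-web-)
#   microk8s kubectl port-forward "pod/$POD_NAME" 8080:8080 -n galaxy
```

The trailing hyphen in the prefix matters here: it keeps `galaxy-project-workflow-...` from matching when the web pod is wanted.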

If you are able to reach the UI, the Galaxy home page should load in your browser.

If you are able to get through the entire demo, then you are done and have everything set up!

Conclusion

By following this guide, we have successfully deployed the Galaxy Project on an EC2 instance using Terraform, Kubernetes, Helm, and CVMFS. This setup provides a scalable and reproducible environment for researchers and data scientists to leverage Galaxy’s powerful workflow automation and computational capabilities.

We began by provisioning our infrastructure with Terraform, ensuring that our EC2 instance was correctly configured with an Elastic IP for persistent access. We then deployed CVMFS drivers, enabling efficient data access across distributed environments, and installed Galaxy within a Kubernetes cluster. Through the use of Helm charts and predefined configuration files, we automated the deployment process, ensuring a seamless setup.

To simplify access, we configured a hosted domain name and explored different methods to connect to the Galaxy Web UI, including port forwarding via SSH, direct access through the instance’s public IP, and routing through a custom domain. Once everything was set up, we validated our deployment by running a sample workflow from the Galaxy training materials, confirming that the system was operational.

With this foundation in place, you now have a fully functional Galaxy instance running in a cloud-based, containerized environment. This setup not only enables high-performance data analysis but also ensures scalability, reproducibility, and ease of collaboration. Whether you’re using Galaxy for bioinformatics, cheminformatics, climate science, or machine learning, this deployment provides the flexibility and power needed for modern scientific computing.

Now that your Galaxy instance is up and running, you can start exploring its vast ecosystem of tools and workflows. If you encounter any issues, feel free to reach out.

Code Reference

https://github.com/lablytics/cvmfs-csi/tree/master/devops/cvmfs-full-setup-basic-galaxy